mirror of https://github.com/mudler/LocalAI.git

docs/examples: enhancements (#1572)

* docs: re-order sections
* fix references
* Add mixtral-instruct, tinyllama-chat, dolphin-2.5-mixtral-8x7b
* Fix link
* Minor corrections
* fix: models is a StringSlice, not a String

  Signed-off-by: Ettore Di Giacinto <mudler@localai.io>
* WIP: switch docs theme
* content
* Fix GH link
* enhancements
* enhancements
* Fixed how to link

  Signed-off-by: lunamidori5 <118759930+lunamidori5@users.noreply.github.com>
* fixups
* logo fix
* more fixups
* final touches

---------

Signed-off-by: Ettore Di Giacinto <mudler@localai.io>
Signed-off-by: lunamidori5 <118759930+lunamidori5@users.noreply.github.com>
Co-authored-by: lunamidori5 <118759930+lunamidori5@users.noreply.github.com>

This commit is contained in:

parent

b5c93f176a

commit

6ca4d38a01

|

|

@ -2,9 +2,7 @@

|

|||

name: Bug report

|

||||

about: Create a report to help us improve

|

||||

title: ''

|

||||

labels: bug

|

||||

assignees: mudler

|

||||

|

||||

labels: bug, unconfirmed, up-for-grabs

|

||||

---

|

||||

|

||||

<!-- Thanks for helping us to improve LocalAI! We welcome all bug reports. Please fill out each area of the template so we can better help you. Comments like this will be hidden when you post but you can delete them if you wish. -->

|

||||

|

|

|

|||

|

|

@ -2,9 +2,7 @@

|

|||

name: Feature request

|

||||

about: Suggest an idea for this project

|

||||

title: ''

|

||||

labels: enhancement

|

||||

assignees: mudler

|

||||

|

||||

labels: enhancement, up-for-grabs

|

||||

---

|

||||

|

||||

<!-- Thanks for helping us to improve LocalAI! We welcome all feature requests. Please fill out each area of the template so we can better help you. Comments like this will be hidden when you post but you can delete them if you wish. -->

|

||||

|

|

|

|||

|

|

@ -1,3 +1,6 @@

|

|||

[submodule "docs/themes/hugo-theme-relearn"]

|

||||

path = docs/themes/hugo-theme-relearn

|

||||

url = https://github.com/McShelby/hugo-theme-relearn.git

|

||||

[submodule "docs/themes/lotusdocs"]

|

||||

path = docs/themes/lotusdocs

|

||||

url = https://github.com/colinwilson/lotusdocs

|

||||

|

|

|

|||

2

LICENSE


|

|

@ -1,6 +1,6 @@

|

|||

MIT License

|

||||

|

||||

Copyright (c) 2023 Ettore Di Giacinto

|

||||

Copyright (c) 2023-2024 Ettore Di Giacinto (mudler@localai.io)

|

||||

|

||||

Permission is hereby granted, free of charge, to any person obtaining a copy

|

||||

of this software and associated documentation files (the "Software"), to deal

|

||||

|

|

|

|||

|

|

@ -0,0 +1,11 @@

|

|||

{

|

||||

"compilerOptions": {

|

||||

"baseUrl": ".",

|

||||

"paths": {

|

||||

"*": [

|

||||

"../../../../.cache/hugo_cache/modules/filecache/modules/pkg/mod/github.com/gohugoio/hugo-mod-jslibs-dist/popperjs/v2@v2.21100.20000/package/dist/cjs/popper.js/*",

|

||||

"../../../../.cache/hugo_cache/modules/filecache/modules/pkg/mod/github.com/twbs/bootstrap@v5.3.2+incompatible/js/*"

|

||||

]

|

||||

}

|

||||

}

|

||||

}

|

||||

296

docs/config.toml


|

|

@ -1,133 +1,178 @@

|

|||

# this is a required setting for this theme to appear on https://themes.gohugo.io/

|

||||

# change this to a value appropriate for you; if your site is served from a subdirectory

|

||||

# set it like "https://example.com/mysite/"

|

||||

baseURL = "https://localai.io/"

|

||||

languageCode = "en-GB"

|

||||

contentDir = "content"

|

||||

enableEmoji = true

|

||||

enableGitInfo = true # N.B. .GitInfo does not currently function with git submodule content directories

|

||||

|

||||

# canonicalization will only be used for the sitemap.xml and index.xml files;

|

||||

# if set to false, a site served from a subdirectory will generate wrong links

|

||||

# inside of the above mentioned files; if you serve the page from the servers root

|

||||

# you are free to set the value to false as recommended by the official Hugo documentation

|

||||

canonifyURLs = true # true -> all relative URLs would instead be canonicalized using baseURL

|

||||

# required value to serve this page from a webserver AND the file system;

|

||||

# if you don't want to serve your page from the file system, you can also set this value

|

||||

# to false

|

||||

relativeURLs = true # true -> rewrite all relative URLs to be relative to the current content

|

||||

# if you set uglyURLs to false, this theme will append 'index.html' to any branch bundle link

|

||||

# so your page can be also served from the file system; if you don't want that,

|

||||

# set disableExplicitIndexURLs=true in the [params] section

|

||||

uglyURLs = false # true -> basic/index.html -> basic.html

|

||||

defaultContentLanguage = 'en'

|

||||

|

||||

# the directory where Hugo reads the themes from; this is specific to your

|

||||

# installation and most certainly needs be deleted or changed

|

||||

#themesdir = "../.."

|

||||

# yeah, well, obviously a mandatory setting for your site, if you want to

|

||||

# use this theme ;-)

|

||||

theme = "hugo-theme-relearn"

|

||||

|

||||

# the main language of this site; also an automatic pirrrate translation is

|

||||

# available in this showcase

|

||||

languageCode = "en"

|

||||

|

||||

# make sure your defaultContentLanguage is the first one in the [languages]

|

||||

# array below, as the theme needs to make assumptions on it

|

||||

defaultContentLanguage = "en"

|

||||

|

||||

# the site's title of this showcase; you should change this ;-)

|

||||

title = "LocalAI Documentation"

|

||||

|

||||

# We disable this for testing the exampleSite; you must do so too

|

||||

# if you want to use the themes parameter disableGeneratorVersion=true;

|

||||

# otherwise Hugo will create a generator tag on your home page

|

||||

disableHugoGeneratorInject = true

|

||||

|

||||

[outputs]

|

||||

# add JSON to the home to support Lunr search; This is a mandatory setting

|

||||

# for the search functionality

|

||||

# add PRINT to home, section and page to activate the feature to print whole

|

||||

# chapters

|

||||

home = ["HTML", "RSS", "PRINT", "SEARCH", "SEARCHPAGE"]

|

||||

section = ["HTML", "RSS", "PRINT"]

|

||||

page = ["HTML", "RSS", "PRINT"]

|

||||

|

||||

[markup]

|

||||

[markup.highlight]

|

||||

# if `guessSyntax = true`, there will be no unstyled code even if no language

|

||||

# was given BUT Mermaid and Math codefences will not work anymore! So this is a

|

||||

# mandatory setting for your site if you want to use Mermaid or Math codefences

|

||||

guessSyntax = true

|

||||

defaultMarkdownHandler = "goldmark"

|

||||

[markup.tableOfContents]

|

||||

endLevel = 3

|

||||

startLevel = 1

|

||||

[markup.goldmark]

|

||||

[markup.goldmark.renderer]

|

||||

unsafe = true # https://jdhao.github.io/2019/12/29/hugo_html_not_shown/

|

||||

# [markup.highlight]

|

||||

# codeFences = false # disables Hugo's default syntax highlighting

|

||||

# [markup.goldmark.parser]

|

||||

# [markup.goldmark.parser.attribute]

|

||||

# block = true

|

||||

# title = true

|

||||

|

||||

# here in this showcase we use our own modified chroma syntax highlightning style

|

||||

# which is imported in theme-relearn-light.css / theme-relearn-dark.css;

|

||||

# if you want to use a predefined style instead:

|

||||

# - remove the following `noClasses`

|

||||

# - set the following `style` to a predefined style name

|

||||

# - remove the `@import` of the self-defined chroma stylesheet from your CSS files

|

||||

# (here eg.: theme-relearn-light.css / theme-relearn-dark.css)

|

||||

noClasses = false

|

||||

style = "tango"

|

||||

|

||||

[markup.goldmark.renderer]

|

||||

# activated for this showcase to use HTML and JavaScript; decide on your own needs;

|

||||

# if in doubt, remove this line

|

||||

unsafe = true

|

||||

|

||||

# allows `hugo server` to display this showcase in IE11; this is used for testing, as we

|

||||

# are still supporting IE11 - although with degraded experience; if you don't care about

|

||||

# `hugo server` or browsers of ancient times, fell free to remove this whole block

|

||||

[server]

|

||||

[[server.headers]]

|

||||

for = "**.html"

|

||||

[server.headers.values]

|

||||

X-UA-Compatible = "IE=edge"

|

||||

[params]

|

||||

|

||||

google_fonts = [

|

||||

["Inter", "300, 400, 600, 700"],

|

||||

["Fira Code", "500, 700"]

|

||||

]

|

||||

|

||||

sans_serif_font = "Inter" # Default is System font

|

||||

secondary_font = "Inter" # Default is System font

|

||||

mono_font = "Fira Code" # Default is System font

|

||||

|

||||

[params.footer]

|

||||

copyright = "© 2023-2024 <a href='https://mudler.pm' target=_blank>Ettore Di Giacinto</a>"

|

||||

version = true # includes git commit info

|

||||

|

||||

[params.social]

|

||||

github = "mudler/LocalAI" # YOUR_GITHUB_ID or YOUR_GITHUB_URL

|

||||

twitter = "LocalAI_API" # YOUR_TWITTER_ID

|

||||

discord = "uJAeKSAGDy"

|

||||

# instagram = "colinwilson" # YOUR_INSTAGRAM_ID

|

||||

rss = true # show rss icon with link

|

||||

|

||||

[params.docs] # Parameters for the /docs 'template'

|

||||

|

||||

logo = "https://github.com/go-skynet/LocalAI/assets/2420543/0966aa2a-166e-4f99-a3e5-6c915fc997dd"

|

||||

logo_text = "LocalAI"

|

||||

title = "LocalAI documentation" # default html title for documentation pages/sections

|

||||

|

||||

pathName = "docs" # path name for documentation site | default "docs"

|

||||

|

||||

# themeColor = "cyan" # (optional) - Set theme accent colour. Options include: blue (default), green, red, yellow, emerald, cardinal, magenta, cyan

|

||||

|

||||

darkMode = true # enable dark mode option? default false

|

||||

|

||||

prism = true # enable syntax highlighting via Prism

|

||||

|

||||

prismTheme = "solarized-light" # (optional) - Set theme for PrismJS. Options include: lotusdocs (default), solarized-light, twilight, lucario

|

||||

|

||||

# gitinfo

|

||||

repoURL = "https://github.com/mudler/LocalAI" # Git repository URL for your site [support for GitHub, GitLab, and BitBucket]

|

||||

repoBranch = "master"

|

||||

editPage = true # enable 'Edit this page' feature - default false

|

||||

lastMod = true # enable 'Last modified' date on pages - default false

|

||||

lastModRelative = true # format 'Last modified' time as relative - default true

|

||||

|

||||

sidebarIcons = true # enable sidebar icons? default false

|

||||

breadcrumbs = true # default is true

|

||||

backToTop = true # enable back-to-top button? default true

|

||||

|

||||

# ToC

|

||||

toc = true # enable table of contents? default is true

|

||||

tocMobile = true # enable table of contents in mobile view? default is true

|

||||

scrollSpy = true # enable scrollspy on ToC? default is true

|

||||

|

||||

# front matter

|

||||

descriptions = true # enable front matter descriptions under content title?

|

||||

titleIcon = true # enable front matter icon title prefix? default is false

|

||||

|

||||

# content navigation

|

||||

navDesc = true # include front matter descriptions in Prev/Next navigation cards

|

||||

navDescTrunc = 30 # Number of characters by which to truncate the Prev/Next descriptions

|

||||

|

||||

listDescTrunc = 100 # Number of characters by which to truncate the list card description

|

||||

|

||||

# Link behaviour

|

||||

intLinkTooltip = true # Enable a tooltip for internal links that displays info about the destination? default false

|

||||

# extLinkNewTab = false # Open external links in a new Tab? default true

|

||||

# logoLinkURL = "" # Set a custom URL destination for the top header logo link.

|

||||

|

||||

[params.flexsearch] # Parameters for FlexSearch

|

||||

enabled = true

|

||||

# tokenize = "full"

|

||||

# optimize = true

|

||||

# cache = 100

|

||||

# minQueryChar = 3 # default is 0 (disabled)

|

||||

# maxResult = 5 # default is 5

|

||||

# searchSectionsIndex = []

|

||||

|

||||

[params.docsearch] # Parameters for DocSearch

|

||||

# appID = "" # Algolia Application ID

|

||||

# apiKey = "" # Algolia Search-Only API (Public) Key

|

||||

# indexName = "" # Index Name to perform search on (or set env variable HUGO_PARAM_DOCSEARCH_indexName)

|

||||

|

||||

[params.analytics] # Parameters for Analytics (Google, Plausible)

|

||||

# plausibleURL = "/docs/s" # (or set via env variable HUGO_PARAM_ANALYTICS_plausibleURL)

|

||||

# plausibleAPI = "/docs/s" # optional - (or set via env variable HUGO_PARAM_ANALYTICS_plausibleAPI)

|

||||

# plausibleDomain = "" # (or set via env variable HUGO_PARAM_ANALYTICS_plausibleDomain)

|

||||

|

||||

# [params.feedback]

|

||||

# enabled = true

|

||||

# emoticonTpl = true

|

||||

# eventDest = ["plausible","google"]

|

||||

# emoticonEventName = "Feedback"

|

||||

# positiveEventName = "Positive Feedback"

|

||||

# negativeEventName = "Negative Feedback"

|

||||

# positiveFormTitle = "What did you like?"

|

||||

# negativeFormTitle = "What went wrong?"

|

||||

# successMsg = "Thank you for helping to improve Lotus Docs' documentation!"

|

||||

# errorMsg = "Sorry! There was an error while attempting to submit your feedback!"

|

||||

# positiveForm = [

|

||||

# ["Accurate", "Accurately describes the feature or option."],

|

||||

# ["Solved my problem", "Helped me resolve an issue."],

|

||||

# ["Easy to understand", "Easy to follow and comprehend."],

|

||||

# ["Something else"]

|

||||

# ]

|

||||

# negativeForm = [

|

||||

# ["Inaccurate", "Doesn't accurately describe the feature or option."],

|

||||

# ["Couldn't find what I was looking for", "Missing important information."],

|

||||

# ["Hard to understand", "Too complicated or unclear."],

|

||||

# ["Code sample errors", "One or more code samples are incorrect."],

|

||||

# ["Something else"]

|

||||

# ]

|

||||

|

||||

[menu]

|

||||

[[menu.primary]]

|

||||

name = "Docs"

|

||||

url = "docs/"

|

||||

identifier = "docs"

|

||||

weight = 10

|

||||

[[menu.primary]]

|

||||

name = "Discord"

|

||||

url = "https://discord.gg/uJAeKSAGDy"

|

||||

identifier = "discord"

|

||||

weight = 20

|

||||

|

||||

# showcase of the menu shortcuts; you can use relative URLs linking

|

||||

# to your content or use fully-quallified URLs to link outside of

|

||||

# your project

|

||||

[languages]

|

||||

[languages.en]

|

||||

title = "LocalAI documentation"

|

||||

weight = 1

|

||||

languageName = "English"

|

||||

[languages.en.params]

|

||||

landingPageName = "<i class='fas fa-home'></i> Home"

|

||||

[[languages.en.menu.shortcuts]]

|

||||

name = "<i class='fas fa-home'></i> Home"

|

||||

url = "/"

|

||||

weight = 1

|

||||

[[languages.en.menu.shortcuts]]

|

||||

name = "<i class='fab fa-fw fa-github'></i> GitHub repo"

|

||||

identifier = "ds"

|

||||

url = "https://github.com/go-skynet/LocalAI"

|

||||

weight = 10

|

||||

# [languages.fr]

|

||||

# title = "LocalAI documentation"

|

||||

# languageName = "Français"

|

||||

# contentDir = "content/fr"

|

||||

# weight = 20

|

||||

# [languages.de]

|

||||

# title = "LocalAI documentation"

|

||||

# languageName = "Deutsch"

|

||||

# contentDir = "content/de"

|

||||

# weight = 30

|

||||

|

||||

[[languages.en.menu.shortcuts]]

|

||||

name = "<i class='fas fa-fw fa-camera'></i> Examples"

|

||||

url = "https://github.com/go-skynet/LocalAI/tree/master/examples/"

|

||||

weight = 11

|

||||

|

||||

[[languages.en.menu.shortcuts]]

|

||||

name = "<i class='fas fa-fw fa-images'></i> Model Gallery"

|

||||

url = "https://github.com/go-skynet/model-gallery"

|

||||

weight = 12

|

||||

|

||||

[[languages.en.menu.shortcuts]]

|

||||

name = "<i class='fas fa-fw fa-download'></i> Container images"

|

||||

url = "https://quay.io/repository/go-skynet/local-ai"

|

||||

weight = 20

|

||||

#[[languages.en.menu.shortcuts]]

|

||||

# name = "<i class='fas fa-fw fa-bullhorn'></i> Credits"

|

||||

# url = "more/credits/"

|

||||

# weight = 30

|

||||

|

||||

[[languages.en.menu.shortcuts]]

|

||||

name = "<i class='fas fa-fw fa-tags'></i> Releases"

|

||||

url = "https://github.com/go-skynet/LocalAI/releases"

|

||||

weight = 40

|

||||

|

||||

|

||||

# mounts are only needed in this showcase to access the publicly available screenshots;

|

||||

# remove this section if you don't need further mounts

|

||||

[module]

|

||||

replacements = "github.com/colinwilson/lotusdocs -> lotusdocs"

|

||||

[[module.mounts]]

|

||||

source = 'archetypes'

|

||||

target = 'archetypes'

|

||||

|

|

@ -152,30 +197,11 @@ disableHugoGeneratorInject = true

|

|||

[[module.mounts]]

|

||||

source = 'static'

|

||||

target = 'static'

|

||||

|

||||

|

||||

# settings specific to this theme's features; choose to your likings and

|

||||

# consult this documentation for explaination

|

||||

[params]

|

||||

editURL = "https://github.com/mudler/LocalAI/edit/master/docs/content/"

|

||||

description = "Documentation for LocalAI"

|

||||

author = "Ettore Di Giacinto"

|

||||

showVisitedLinks = true

|

||||

collapsibleMenu = true

|

||||

disableBreadcrumb = false

|

||||

disableInlineCopyToClipBoard = true

|

||||

disableNextPrev = false

|

||||

disableLandingPageButton = true

|

||||

breadcrumbSeparator = ">"

|

||||

titleSeparator = "::"

|

||||

themeVariant = [ "auto", "relearn-bright", "relearn-light", "relearn-dark", "learn", "neon", "blue", "green", "red" ]

|

||||

themeVariantAuto = [ "relearn-light", "relearn-dark" ]

|

||||

disableSeoHiddenPages = true

|

||||

# this is to index search for your native language in other languages, too (eg.

|

||||

# pir in this showcase)

|

||||

additionalContentLanguage = [ "en" ]

|

||||

# this is for the stylesheet generator to allow for interactivity in Mermaid

|

||||

# graphs; you usually will not need it and you should remove this for

|

||||

# security reasons

|

||||

mermaidInitialize = "{ \"securityLevel\": \"loose\" }"

|

||||

mermaidZoom = true

|

||||

# uncomment line below for temporary local development of module

|

||||

# or when using a 'theme' as a git submodule

|

||||

[[module.imports]]

|

||||

path = "github.com/colinwilson/lotusdocs"

|

||||

disable = false

|

||||

[[module.imports]]

|

||||

path = "github.com/gohugoio/hugo-mod-bootstrap-scss/v5"

|

||||

disable = false

|

||||

|

|

|

|||

|

|

@ -1,37 +0,0 @@

|

|||

|

||||

+++

|

||||

disableToc = false

|

||||

title = "Development documentation"

|

||||

weight = 7

|

||||

+++

|

||||

|

||||

{{% notice note %}}

|

||||

|

||||

This section is for developers and contributors. If you are looking for the user documentation, this is not the right place!

|

||||

|

||||

{{% /notice %}}

|

||||

|

||||

This section collects how-tos, notes and development documentation.

|

||||

|

||||

## Contributing

|

||||

|

||||

We use conventional commits and semantic versioning. Please follow the [conventional commits](https://www.conventionalcommits.org/en/v1.0.0/) specification when writing commit messages.

|

||||

|

||||

## Creating a gRPC backend

|

||||

|

||||

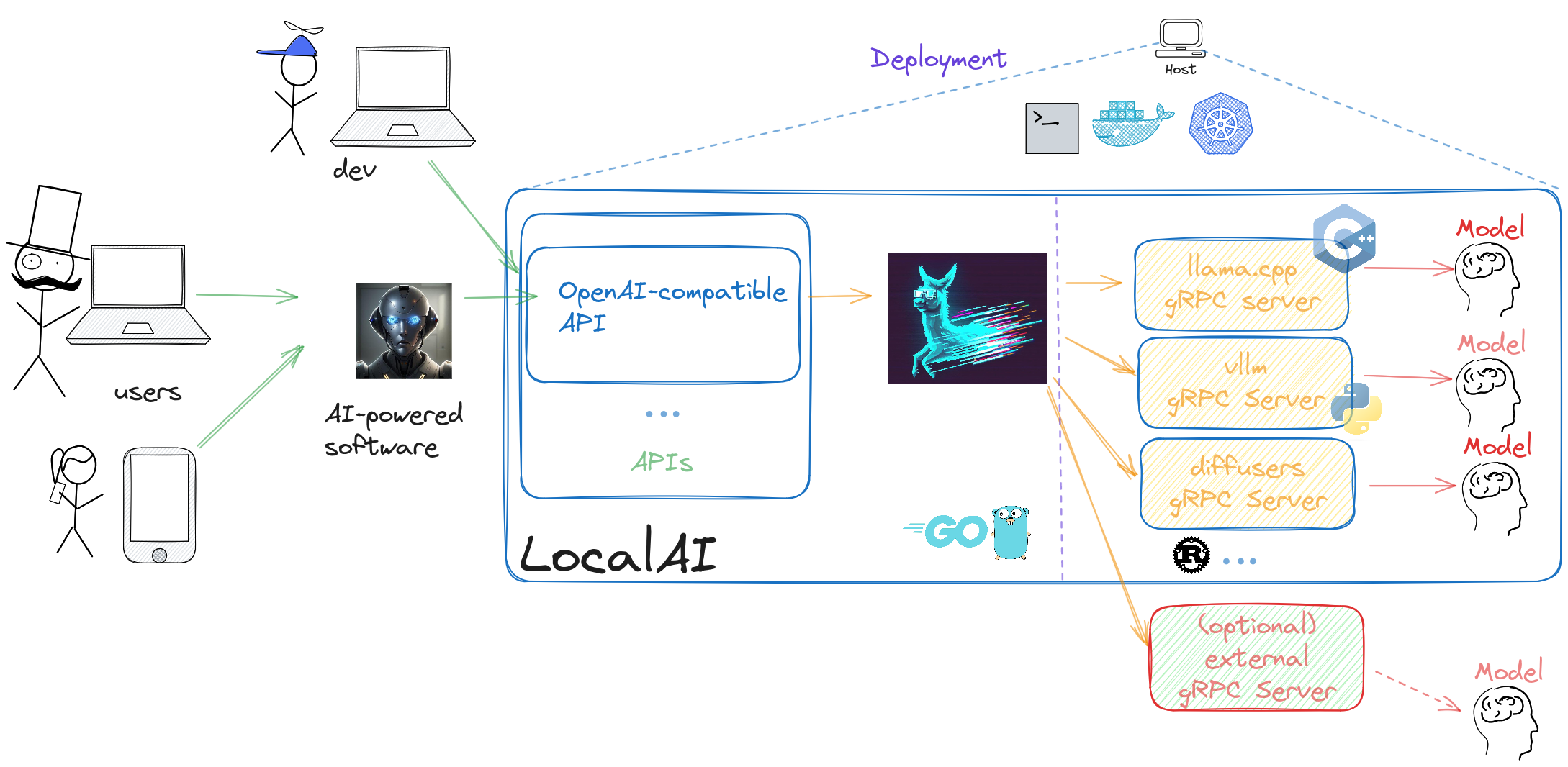

LocalAI backends are `gRPC` servers.

|

||||

|

||||

In order to create a new backend you need to:

|

||||

|

||||

- If there are changes required to the protobuf code, modify the [proto](https://github.com/go-skynet/LocalAI/blob/master/pkg/grpc/proto/backend.proto) file and re-generate the code with `make protogen`.

|

||||

- Modify the `Makefile` to add your new backend and re-generate the client code with `make protogen` if necessary.

|

||||

- Create a new `gRPC` server in `extra/grpc` if it's not written in go: [link](https://github.com/go-skynet/LocalAI/tree/master/extra/grpc), and create the specific implementation.

|

||||

- Golang `gRPC` servers should be added in the [pkg/backend](https://github.com/go-skynet/LocalAI/tree/master/pkg/backend) directory given their type. See [piper](https://github.com/go-skynet/LocalAI/blob/master/pkg/backend/tts/piper.go) as an example.

|

||||

- Golang servers need a corresponding `cmd/grpc` binary, which must be created too (see [cmd/grpc/piper](https://github.com/go-skynet/LocalAI/tree/master/cmd/grpc/piper) as an example). Also update the Makefile so that the binary is built at build time.

|

||||

- Update the Dockerfile: if the backend is written in another language, update the `Dockerfile` default *EXTERNAL_GRPC_BACKENDS* variable by listing the new binary [link](https://github.com/go-skynet/LocalAI/blob/c2233648164f67cdb74dd33b8d46244e14436ab3/Dockerfile#L14).

|

||||

|

||||

Once you are done, you can either re-build `LocalAI` with your backend, or try it out by running the `gRPC` server manually and passing its host and port to LocalAI with `--external-grpc-backends` (or the `EXTERNAL_GRPC_BACKENDS` environment variable). Both take a comma-separated list of `name:host:port` tuples, e.g. `my-awesome-backend:host:port`:

|

||||

|

||||

```bash

|

||||

./local-ai --debug --external-grpc-backends "my-awesome-backend:host:port" ...

|

||||

```

|

||||

|

|

@ -0,0 +1,11 @@

|

|||

---

|

||||

weight: 20

|

||||

title: "Advanced"

|

||||

description: "Advanced usage"

|

||||

icon: science

|

||||

lead: ""

|

||||

date: 2020-10-06T08:49:15+00:00

|

||||

lastmod: 2020-10-06T08:49:15+00:00

|

||||

draft: false

|

||||

images: []

|

||||

---

|

||||

|

|

@ -1,8 +1,9 @@

|

|||

|

||||

+++

|

||||

disableToc = false

|

||||

title = "Advanced"

|

||||

weight = 6

|

||||

title = "Advanced usage"

|

||||

weight = 21

|

||||

url = '/advanced'

|

||||

+++

|

||||

|

||||

### Advanced configuration with YAML files

|

||||

|

|

@ -309,7 +310,7 @@ prompt_cache_all: true

|

|||

|

||||

By default, LocalAI tries to autoload the model by trying all the backends. This might work for most models, but some of the backends are NOT configured to autoload.

|

||||

|

||||

The available backends are listed in the [model compatibility table]({{%relref "model-compatibility" %}}).

|

||||

The available backends are listed in the [model compatibility table]({{%relref "docs/reference/compatibility-table" %}}).

|

||||

|

||||

In order to specify a backend for your models, create a model config file in your `models` directory specifying the backend:

|

||||

|

||||

|

|

@ -343,6 +344,19 @@ Or a remote URI:

|

|||

./local-ai --debug --external-grpc-backends "my-awesome-backend:host:port"

|

||||

```

|

||||

|

||||

For example, to start vllm manually after compiling LocalAI (assuming you run the command from the root of the repository):

|

||||

|

||||

```bash

|

||||

./local-ai --external-grpc-backends "vllm:$PWD/backend/python/vllm/run.sh"

|

||||

```

|

||||

|

||||

Note that it is first necessary to create the conda environment with:

|

||||

|

||||

```bash

|

||||

make -C backend/python/vllm

|

||||

```

|

||||

|

||||

|

||||

### Environment variables

|

||||

|

||||

When LocalAI runs in a container,

|

||||

|

|

@ -419,11 +433,11 @@ RUN PATH=$PATH:/opt/conda/bin make -C backend/python/diffusers

|

|||

ENV EXTERNAL_GRPC_BACKENDS="diffusers:/build/backend/python/diffusers/run.sh"

|

||||

```

|

||||

|

||||

{{% notice note %}}

|

||||

{{% alert note %}}

|

||||

|

||||

You can specify remote external backends or paths to local files. The syntax is `backend-name:/path/to/backend` or `backend-name:host:port`.

|

||||

|

||||

{{% /notice %}}

|

||||

{{% /alert %}}
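
To make the two accepted forms concrete, here is a minimal Python sketch of how such a comma-separated list could be split into backend names and targets. It is only an illustration of the syntax described in the note: `parse_external_backends` is a hypothetical helper, not LocalAI's own parser, and it assumes that everything after the first colon is the target (a local path or `host:port`).

```python
def parse_external_backends(spec):
    """Split a comma-separated list of name:target entries into a dict.

    Hypothetical helper for illustration; LocalAI's real parsing may differ.
    """
    backends = {}
    for entry in spec.split(","):
        entry = entry.strip()
        if not entry:
            continue  # tolerate trailing commas and empty items
        # Split on the first colon only: the target may contain colons (host:port).
        name, sep, target = entry.partition(":")
        if not sep or not name or not target:
            raise ValueError(f"malformed backend entry: {entry!r}")
        backends[name] = target
    return backends
```

With this sketch, `diffusers:/build/backend/python/diffusers/run.sh` maps the name `diffusers` to a local script, while `my-awesome-backend:host:port` maps the name to the remote target `host:port`.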

|

||||

|

||||

#### In runtime

|

||||

|

||||

|

|

@ -2,12 +2,12 @@

|

|||

+++

|

||||

disableToc = false

|

||||

title = "Fine-tuning LLMs for text generation"

|

||||

weight = 3

|

||||

weight = 22

|

||||

+++

|

||||

|

||||

{{% notice note %}}

|

||||

{{% alert note %}}

|

||||

Section under construction

|

||||

{{% /notice %}}

|

||||

{{% /alert %}}

|

||||

|

||||

This section covers how to fine-tune a language model for text generation and consume it in LocalAI.

|

||||

|

||||

|

|

@ -2,7 +2,8 @@

|

|||

+++

|

||||

disableToc = false

|

||||

title = "FAQ"

|

||||

weight = 9

|

||||

weight = 24

|

||||

icon = "quiz"

|

||||

+++

|

||||

|

||||

## Frequently asked questions

|

||||

|

|

@ -12,25 +13,13 @@ Here are answers to some of the most common questions.

|

|||

|

||||

### How do I get models?

|

||||

|

||||

<details>

|

||||

|

||||

Most gguf-based models should work, but newer models may require additions to the API. If a model doesn't work, please feel free to open an issue. However, be cautious about downloading models from the internet directly onto your machine, as there may be security vulnerabilities in llama.cpp or ggml that could be maliciously exploited. Some models can be found on Hugging Face: https://huggingface.co/models?search=gguf, and models from gpt4all are compatible too: https://github.com/nomic-ai/gpt4all.

|

||||

|

||||

</details>

|

||||

|

||||

### What's the difference with Serge, or XXX?

|

||||

|

||||

|

||||

<details>

|

||||

|

||||

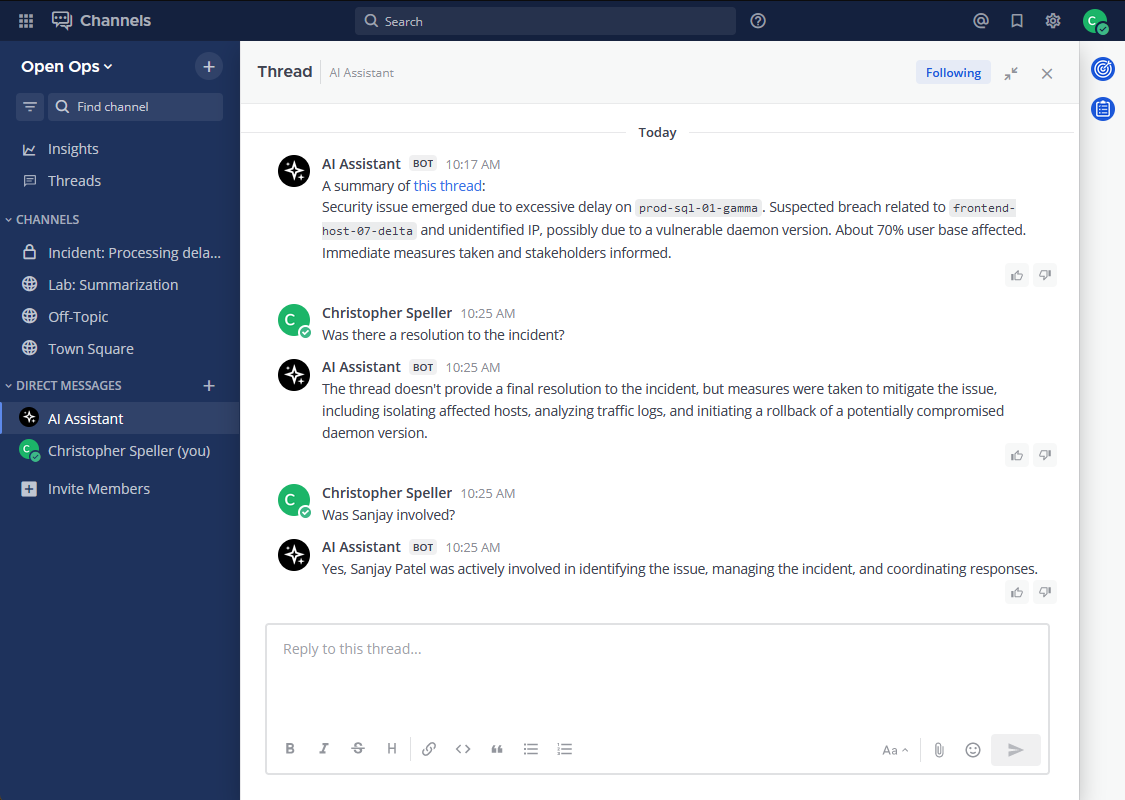

LocalAI is a multi-model solution that doesn't focus on a specific model type (e.g., llama.cpp or alpaca.cpp). It handles all of these internally for faster inference, and it is easy to set up locally and deploy to Kubernetes.

|

||||

|

||||

</details>

|

||||

|

||||

|

||||

### Everything is slow, how come?

|

||||

|

||||

<details>

|

||||

### Everything is slow, how is it possible?

|

||||

|

||||

There are a few reasons why this could occur. Some tips are:

|

||||

- Don't use HDD to store your models. Prefer SSD over HDD. In case you are stuck with HDD, disable `mmap` in the model config file so it loads everything in memory.

|

||||

|

|

@ -38,61 +27,31 @@ There are few situation why this could occur. Some tips are:

|

|||

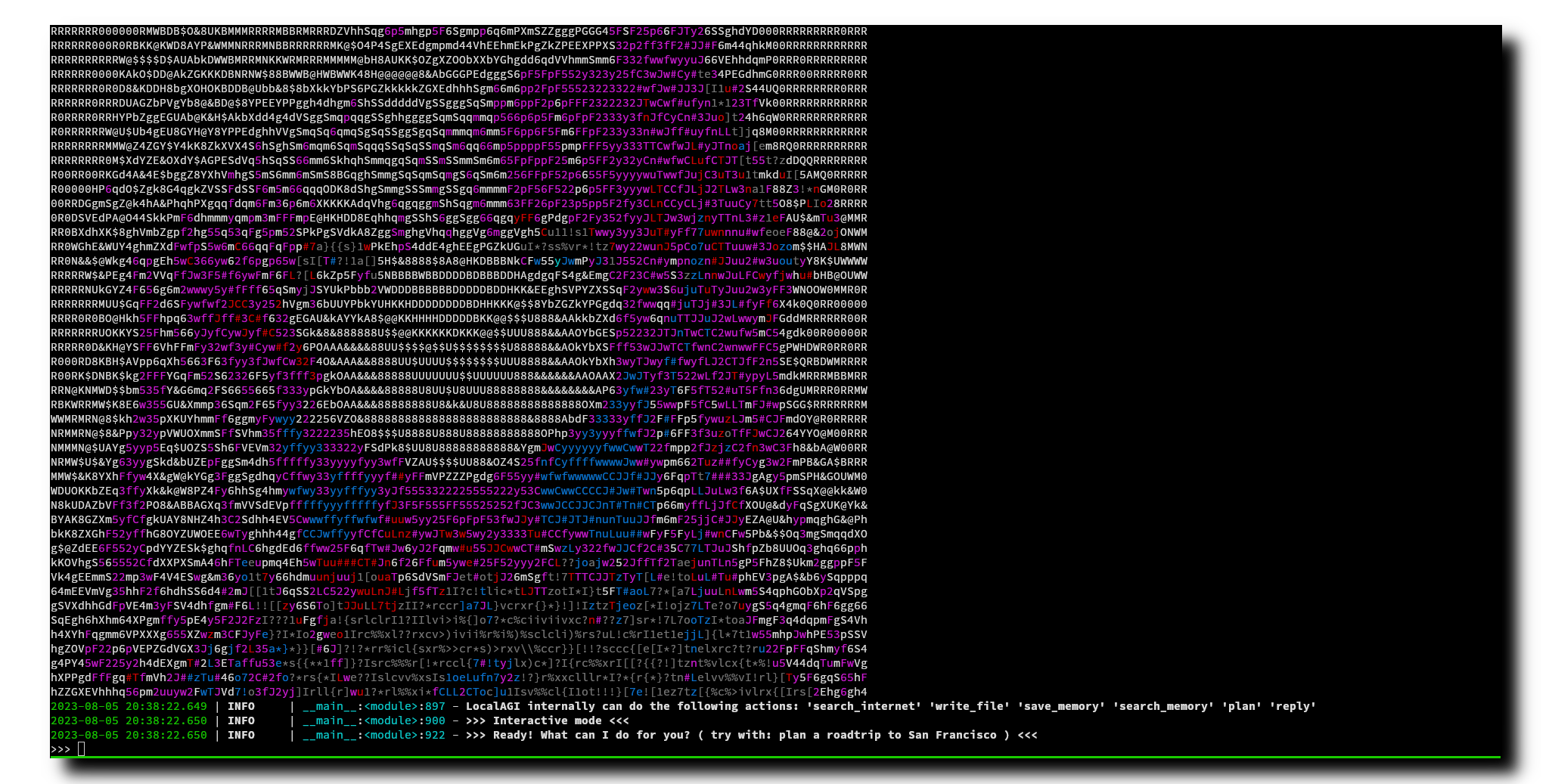

- Run LocalAI with `DEBUG=true`. This gives more information, including stats on the token inference speed.

|

||||

- Check that you are actually getting an output: run a simple curl request with `"stream": true` to see how fast the model is responding.
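
As a rough illustration of the last tip, the following Python sketch sends a streaming chat request using only the standard library and prints each streamed line with the time elapsed since the request started. The helper names `build_stream_request` and `time_stream` are hypothetical, and the `/v1/chat/completions` path is an assumption based on the OpenAI API convention that LocalAI mimics; adjust the base URL and model name to your setup.

```python
import json
import time
import urllib.request


def build_stream_request(base_url, model, prompt):
    """Build a POST request for a streaming chat completion (not yet sent)."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": True,  # ask the server to send tokens as they are generated
    }).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + "/v1/chat/completions",  # assumed OpenAI-style path
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def time_stream(request):
    """Send the request and print every non-empty streamed line with its arrival time."""
    start = time.monotonic()
    with urllib.request.urlopen(request) as response:
        for raw in response:
            line = raw.decode("utf-8").strip()
            if line:
                print(f"{time.monotonic() - start:6.2f}s  {line}")
```

For example, `time_stream(build_stream_request("http://localhost:8080", "my-model", "Hello"))` would show how quickly the first chunks arrive, assuming LocalAI listens on `localhost:8080` and a model named `my-model` is configured (both are placeholders).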

|

||||

|

||||

</details>

|

||||

|

||||

### Can I use it with a Discord bot, or XXX?

|

||||

|

||||

<details>

|

||||

|

||||

Yes! If the client uses OpenAI and supports setting a different base URL to send requests to, you can use the LocalAI endpoint. This lets you use LocalAI with any application that was built to work with OpenAI, without changing the application!

|

||||

|

||||

</details>

|

||||

|

||||

|

||||

### Can this leverage GPUs?

|

||||

|

||||

<details>

|

||||

|

||||

There is partial GPU support, see build instructions above.

|

||||

|

||||

</details>

|

||||

There is GPU support, see {{%relref "docs/features/GPU-acceleration" %}}.

|

||||

|

||||

### Where is the webUI?

|

||||

|

||||

<details>

|

||||

There is the availability of localai-webui and chatbot-ui in the examples section and can be setup as per the instructions. However as LocalAI is an API you can already plug it into existing projects that provides are UI interfaces to OpenAI's APIs. There are several already on github, and should be compatible with LocalAI already (as it mimics the OpenAI API)

|

||||

|

||||

</details>

|

||||

localai-webui and chatbot-ui are available in the examples section and can be set up as per the instructions. However, as LocalAI is an API, you can already plug it into existing projects that provide UI interfaces to OpenAI's APIs. There are several on GitHub, and they should be compatible with LocalAI already (as it mimics the OpenAI API).

|

||||

|

||||

### Does it work with AutoGPT?

|

||||

|

||||

<details>

|

||||

|

||||

Yes, see the [examples](https://github.com/go-skynet/LocalAI/tree/master/examples/)!

|

||||

|

||||

</details>

|

||||

|

||||

### How can I troubleshoot when something is wrong?

|

||||

|

||||

<details>

|

||||

|

||||

Enable the debug mode by setting `DEBUG=true` in the environment variables. This will give you more information on what's going on.

|

||||

You can also specify `--debug` in the command line.

|

||||

|

||||

</details>

|

||||

|

||||

### I'm getting 'invalid pitch' error when running with CUDA, what's wrong?

|

||||

|

||||

<details>

|

||||

|

||||

This typically happens when your prompt exceeds the context size. Try to reduce the prompt size, or increase the context size.

|

||||

|

||||

</details>

|

||||

|

||||

### I'm getting a 'SIGILL' error, what's wrong?

|

||||

|

||||

<details>

|

||||

|

||||

Your CPU probably does not have support for certain instructions that are compiled by default in the pre-built binaries. If you are running in a container, try setting `REBUILD=true` and disable the CPU instructions that are not compatible with your CPU. For instance: `CMAKE_ARGS="-DLLAMA_F16C=OFF -DLLAMA_AVX512=OFF -DLLAMA_AVX2=OFF -DLLAMA_FMA=OFF" make build`

|

||||

|

||||

</details>

|

||||

Your CPU probably does not have support for certain instructions that are compiled by default in the pre-built binaries. If you are running in a container, try setting `REBUILD=true` and disable the CPU instructions that are not compatible with your CPU. For instance: `CMAKE_ARGS="-DLLAMA_F16C=OFF -DLLAMA_AVX512=OFF -DLLAMA_AVX2=OFF -DLLAMA_FMA=OFF" make build`
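
To decide which options to switch off, one possible approach on Linux is to compare the CPU flags reported in `/proc/cpuinfo` with the options quoted above. The Python sketch below is an illustration only: the helper names are hypothetical, and the mapping from cpuinfo flag names (`f16c`, `avx512f`, `avx2`, `fma`) to the `LLAMA_*` options is an assumption, not something taken from the LocalAI build system.

```python
# Assumed mapping of /proc/cpuinfo flag names to the CMake options quoted above.
FLAG_TO_OPTION = {
    "f16c": "LLAMA_F16C",
    "avx512f": "LLAMA_AVX512",
    "avx2": "LLAMA_AVX2",
    "fma": "LLAMA_FMA",
}


def read_cpu_flags(path="/proc/cpuinfo"):
    """Return the set of CPU flags from the first 'flags' line (Linux x86 only)."""
    with open(path, encoding="utf-8") as cpuinfo:
        for line in cpuinfo:
            if line.startswith("flags"):
                return set(line.split(":", 1)[1].split())
    return set()


def cmake_args_for(flags):
    """Build a CMAKE_ARGS value that turns OFF every option the CPU lacks."""
    missing = [opt for flag, opt in FLAG_TO_OPTION.items() if flag not in flags]
    return " ".join(f"-D{opt}=OFF" for opt in missing)
```

Calling `cmake_args_for(read_cpu_flags())` then yields a string that can be passed as `CMAKE_ARGS` to `make build`; an empty string means none of the listed options needs to be disabled.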

|

||||

|

|

@ -1,22 +1,23 @@

|

|||

|

||||

+++

|

||||

disableToc = false

|

||||

title = "⚡ GPU acceleration"

|

||||

weight = 2

|

||||

weight = 9

|

||||

+++

|

||||

|

||||

{{% notice note %}}

|

||||

{{% alert context="warning" %}}

|

||||

Section under construction

|

||||

{{% /notice %}}

|

||||

{{% /alert %}}

|

||||

|

||||

This section contains instructions on how to use LocalAI with GPU acceleration.

|

||||

|

||||

{{% notice note %}}

|

||||

For accelleration for AMD or Metal HW there are no specific container images, see the [build]({{%relref "build/#acceleration" %}})

|

||||

{{% /notice %}}

|

||||

{{% alert icon="⚡" context="warning" %}}

|

||||

For acceleration on AMD or Metal hardware there are no specific container images; see the [build]({{%relref "docs/getting-started/build#Acceleration" %}}) section.

|

||||

{{% /alert %}}

|

||||

|

||||

### CUDA(NVIDIA) acceleration

|

||||

|

||||

#### Requirements

|

||||

|

||||

Requirement: nvidia-container-toolkit (installation instructions [1](https://www.server-world.info/en/note?os=Ubuntu_22.04&p=nvidia&f=2) [2](https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/install-guide.html))

|

||||

|

||||

To check which CUDA version you need, run either `nvidia-smi` or `nvcc --version`.

|

||||

|

|

@ -0,0 +1,7 @@

|

|||

|

||||

+++

|

||||

disableToc = false

|

||||

title = "Features"

|

||||

weight = 8

|

||||

icon = "feature_search"

|

||||

+++

|

||||

|

|

@ -1,10 +1,12 @@

|

|||

+++

|

||||

disableToc = false

|

||||

title = "🔈 Audio to text"

|

||||

weight = 2

|

||||

weight = 16

|

||||

+++

|

||||

|

||||

The transcription endpoint allows to convert audio files to text. The endpoint is based on [whisper.cpp](https://github.com/ggerganov/whisper.cpp), a C++ library for audio transcription. The endpoint supports the audio formats supported by `ffmpeg`.

|

||||

Audio to text models are models that can generate text from an audio file.

|

||||

|

||||

The transcription endpoint converts audio files to text. The endpoint is based on [whisper.cpp](https://github.com/ggerganov/whisper.cpp), a C++ library for audio transcription. The endpoint accepts all the audio formats supported by `ffmpeg`.

|

||||

|

||||

## Usage

|

||||

|

||||

|

|

@ -2,20 +2,20 @@

|

|||

+++

|

||||

disableToc = false

|

||||

title = "✍️ Constrained grammars"

|

||||

weight = 6

|

||||

weight = 15

|

||||

+++

|

||||

|

||||

The chat endpoint accepts an additional `grammar` parameter which takes a [BNF defined grammar](https://en.wikipedia.org/wiki/Backus%E2%80%93Naur_form).

|

||||

|

||||

This constrains the LLM output to a user-defined schema, making it possible to generate `JSON`, `YAML`, or anything else that can be defined with a BNF grammar.
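As a rough sketch of what a request using `grammar` could look like, the Python snippet below builds a chat payload whose answer is restricted to `yes` or `no`. Only the `grammar` field and the chat endpoint come from the text above; the model name `my-model`, the grammar itself, and the `build_grammar_request` and `send` helpers are illustrative assumptions.

```python
import json
import urllib.request

# Hypothetical grammar in the BNF-like syntax used by llama.cpp:
# the whole answer must be either "yes" or "no".
YES_NO_GRAMMAR = 'root ::= ("yes" | "no")'


def build_grammar_request(model, question, grammar):
    """Build the JSON body for a grammar-constrained chat completion."""
    return {
        "model": model,  # assumed name; use a model configured in your instance
        "messages": [{"role": "user", "content": question}],
        "grammar": grammar,
    }


def send(payload, base_url="http://localhost:8080"):
    """POST the payload to the chat endpoint (needs a running LocalAI instance)."""
    req = urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


payload = build_grammar_request("my-model", "Is the sky blue?", YES_NO_GRAMMAR)
print(json.dumps(payload, indent=2))
```

Calling `send(payload)` is left out of the demo on purpose, since it requires a running server with a llama.cpp-compatible model.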

|

||||

|

||||

{{% notice note %}}

|

||||

This feature works only with models compatible with the [llama.cpp](https://github.com/ggerganov/llama.cpp) backend (see also [Model compatibility]({{%relref "model-compatibility" %}})). For details on how it works, see the upstream PRs: https://github.com/ggerganov/llama.cpp/pull/1773, https://github.com/ggerganov/llama.cpp/pull/1887

|

||||

{{% /notice %}}

|

||||

{{% alert note %}}

|

||||

This feature works only with models compatible with the [llama.cpp](https://github.com/ggerganov/llama.cpp) backend (see also [Model compatibility]({{%relref "docs/reference/compatibility-table" %}})). For details on how it works, see the upstream PRs: https://github.com/ggerganov/llama.cpp/pull/1773, https://github.com/ggerganov/llama.cpp/pull/1887

|

||||

{{% /alert %}}

|

||||

|

||||

## Setup

|

||||

|

||||

Follow the setup instructions from the [LocalAI functions]({{%relref "features/openai-functions" %}}) page.

|

||||

Follow the setup instructions from the [LocalAI functions]({{%relref "docs/features/openai-functions" %}}) page.

|

||||

|

||||

## 💡 Usage example

|

||||

|

||||

|

|

@ -2,7 +2,7 @@

|

|||

+++

|

||||

disableToc = false

|

||||

title = "🧠 Embeddings"

|

||||

weight = 2

|

||||

weight = 13

|

||||

+++

|

||||

|

||||

LocalAI supports generating embeddings for text or for a list of tokens.

|

||||

|

|

@ -73,7 +73,7 @@ parameters:

|

|||

|

||||

The `sentencetransformers` backend uses Python [sentence-transformers](https://github.com/UKPLab/sentence-transformers). For a list of all pre-trained models available see here: https://github.com/UKPLab/sentence-transformers#pre-trained-models

|

||||

|

||||

{{% notice note %}}

|

||||

{{% alert note %}}

|

||||

|

||||

- The `sentencetransformers` backend is an optional backend of LocalAI and uses Python. If you are running `LocalAI` from the container images, it is already configured and ready to use.

|

||||

- If you are running `LocalAI` manually you must install the python dependencies (`make prepare-extra-conda-environments`). This requires `conda` to be installed.

|

||||

|

|

@ -82,7 +82,7 @@ The `sentencetransformers` backend uses Python [sentence-transformers](https://g

|

|||

- The `sentencetransformers` backend supports only embeddings of text, not of tokens. If you need to embed tokens you can use the `bert` backend or `llama.cpp`.

|

||||

- No models are required to be downloaded before using the `sentencetransformers` backend. The models will be downloaded automatically the first time the API is used.

|

||||

|

||||

{{% /notice %}}

|

||||

{{% /alert %}}

|

||||

|

||||

## Llama.cpp embeddings

|

||||

|

||||

|

|

@ -2,12 +2,12 @@

|

|||

+++

|

||||

disableToc = false

|

||||

title = "🆕 GPT Vision"

|

||||

weight = 2

|

||||

weight = 14

|

||||

+++

|

||||

|

||||

{{% notice note %}}

|

||||

{{% alert note %}}

|

||||

Available only on `master` builds

|

||||

{{% /notice %}}

|

||||

{{% /alert %}}

|

||||

|

||||

LocalAI supports understanding images by using [LLaVA](https://llava.hliu.cc/), and implements the [GPT Vision API](https://platform.openai.com/docs/guides/vision) from OpenAI.

|

||||

|

||||

|

|

@ -2,13 +2,13 @@

|

|||

+++

|

||||

disableToc = false

|

||||

title = "🎨 Image generation"

|

||||

weight = 2

|

||||

weight = 12

|

||||

+++

|

||||

|

||||

|

||||

(Generated with [AnimagineXL](https://huggingface.co/Linaqruf/animagine-xl))

|

||||

|

||||

LocalAI supports generating images with Stable diffusion, running on CPU using a C++ implementation, [Stable-Diffusion-NCNN](https://github.com/EdVince/Stable-Diffusion-NCNN) ([binding](https://github.com/mudler/go-stable-diffusion)) and [🧨 Diffusers]({{%relref "model-compatibility/diffusers" %}}).

|

||||

LocalAI supports generating images with Stable diffusion, running on CPU using C++ and Python implementations.

|

||||

|

||||

## Usage

|

||||

|

||||

|

|

@ -35,7 +35,9 @@ curl http://localhost:8080/v1/images/generations -H "Content-Type: application/j

|

|||

}'

|

||||

```

|

||||

|

||||

## stablediffusion-cpp

|

||||

## Backends

|

||||

|

||||

### stablediffusion-cpp

|

||||

|

||||

| mode=0 | mode=1 (winograd/sgemm) |

|

||||

|------------------------------------------------------------------------------------------------------------------------|------------------------------------------------------------------------------------------------------------------------|

|

||||

|

|

@ -45,7 +47,7 @@ curl http://localhost:8080/v1/images/generations -H "Content-Type: application/j

|

|||

|

||||

Note: the image generator supports images up to 512x512. You can, however, use other tools to upscale the image, for instance: https://github.com/upscayl/upscayl.

|

||||

|

||||

### Setup

|

||||

#### Setup

|

||||

|

||||

Note: In order to use the `images/generation` endpoint with the `stablediffusion` C++ backend, you need to build LocalAI with `GO_TAGS=stablediffusion`. If you are using the container images, it is already enabled.

|

||||

|

||||

|

|

@ -128,11 +130,14 @@ models

|

|||

|

||||

{{< /tabs >}}

|

||||

|

||||

## Diffusers

|

||||

### Diffusers

|

||||

|

||||

This is an extra backend: it is already available in the container images, so no setup is required.

|

||||

[Diffusers](https://huggingface.co/docs/diffusers/index) is the go-to library for state-of-the-art pretrained diffusion models for generating images, audio, and even 3D structures of molecules. LocalAI has a diffusers backend which allows image generation using the `diffusers` library.

|

||||

|

||||

### Model setup

|

||||

|

||||

(Generated with [AnimagineXL](https://huggingface.co/Linaqruf/animagine-xl))

|

||||

|

||||

#### Model setup

|

||||

|

||||

The models will be downloaded automatically from `huggingface` the first time you use the backend.

|

||||

|

||||

|

|

@ -150,3 +155,198 @@ diffusers:

|

|||

cuda: false # Enable for GPU usage (CUDA)

|

||||

scheduler_type: euler_a

|

||||

```

|

||||

|

||||

#### Dependencies

|

||||

|

||||

This is an extra backend: it is already available in the container images, so no setup is required. Do not use *core* images (ending with `-core`). If you are building manually, see the [build instructions]({{%relref "docs/getting-started/build" %}}).

|

||||

|

||||

#### Model setup

|

||||

|

||||

The models will be downloaded automatically from `huggingface` the first time you use the backend.

|

||||

|

||||

Create a model configuration file in the `models` directory, for instance to use `Linaqruf/animagine-xl` (the example below enables CUDA and `f16`; set both to `false` to run on CPU):

|

||||

|

||||

```yaml
name: animagine-xl
parameters:
  model: Linaqruf/animagine-xl
backend: diffusers
cuda: true
f16: true
diffusers:
  scheduler_type: euler_a
```

|

||||

|

||||

#### Local models

|

||||

|

||||

You can also use local models, or modify some parameters like `clip_skip`, `scheduler_type`, for instance:

|

||||

|

||||

```yaml
name: stablediffusion
parameters:
  model: toonyou_beta6.safetensors
backend: diffusers
step: 30
f16: true
cuda: true
diffusers:
  pipeline_type: StableDiffusionPipeline
  enable_parameters: "negative_prompt,num_inference_steps,clip_skip"
  scheduler_type: "k_dpmpp_sde"
  cfg_scale: 8
  clip_skip: 11
```

|

||||

|

||||

#### Configuration parameters

|

||||

|

||||

The following parameters are available in the configuration file:

|

||||

|

||||

| Parameter | Description | Default |

|

||||

| --- | --- | --- |

|

||||

| `f16` | Force the usage of `float16` instead of `float32` | `false` |

|

||||

| `step` | Number of steps to run the model for | `30` |

|

||||

| `cuda` | Enable CUDA acceleration | `false` |

|

||||

| `enable_parameters` | Parameters to enable for the model | `negative_prompt,num_inference_steps,clip_skip` |

|

||||

| `scheduler_type` | Scheduler type | `k_dpmpp_sde` |

|

||||

| `cfg_scale` | Classifier-free guidance (CFG) scale | `8` |

|

||||

| `clip_skip` | Clip skip | None |

|

||||

| `pipeline_type` | Pipeline type | `AutoPipelineForText2Image` |

|

||||

|

||||

Several scheduler types are available:

|

||||

|

||||

| Scheduler | Description |

|

||||

| --- | --- |

|

||||

| `ddim` | DDIM |

|

||||

| `pndm` | PNDM |

|

||||

| `heun` | Heun |

|

||||

| `unipc` | UniPC |

|

||||

| `euler` | Euler |

|

||||

| `euler_a` | Euler a |

|

||||

| `lms` | LMS |

|

||||

| `k_lms` | LMS Karras |

|

||||

| `dpm_2` | DPM2 |

|

||||

| `k_dpm_2` | DPM2 Karras |

|

||||

| `dpm_2_a` | DPM2 a |

|

||||

| `k_dpm_2_a` | DPM2 a Karras |

|

||||

| `dpmpp_2m` | DPM++ 2M |

|

||||

| `k_dpmpp_2m` | DPM++ 2M Karras |

|

||||

| `dpmpp_sde` | DPM++ SDE |

|

||||

| `k_dpmpp_sde` | DPM++ SDE Karras |

|

||||

| `dpmpp_2m_sde` | DPM++ 2M SDE |

|

||||

| `k_dpmpp_2m_sde` | DPM++ 2M SDE Karras |
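A small sanity check can catch a misspelled `scheduler_type` before the backend is started. The sketch below only mirrors the scheduler names listed in the table above; the `check_scheduler` helper is hypothetical and is not part of LocalAI.

```python
# Scheduler names copied from the table above.
KNOWN_SCHEDULERS = {
    "ddim", "pndm", "heun", "unipc", "euler", "euler_a", "lms", "k_lms",
    "dpm_2", "k_dpm_2", "dpm_2_a", "k_dpm_2_a", "dpmpp_2m", "k_dpmpp_2m",
    "dpmpp_sde", "k_dpmpp_sde", "dpmpp_2m_sde", "k_dpmpp_2m_sde",
}


def check_scheduler(name):
    """Return True if `name` is one of the documented scheduler types."""
    return name in KNOWN_SCHEDULERS


print(check_scheduler("k_dpmpp_sde"))  # a documented name
print(check_scheduler("euler-a"))      # hyphen instead of underscore
```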

|

||||

|

||||

Available pipeline types:

|

||||

|

||||

| Pipeline type | Description |

|

||||

| --- | --- |

|

||||

| `StableDiffusionPipeline` | Stable diffusion pipeline |

|

||||

| `StableDiffusionImg2ImgPipeline` | Stable diffusion image to image pipeline |

|

||||

| `StableDiffusionDepth2ImgPipeline` | Stable diffusion depth to image pipeline |

|

||||

| `DiffusionPipeline` | Diffusion pipeline |

|

||||

| `StableDiffusionXLPipeline` | Stable diffusion XL pipeline |

|

||||

|

||||

#### Usage

|

||||

|

||||

#### Text to Image

|

||||

Use the `image` generation endpoint with the `model` name from the configuration file:

|

||||

|

||||

```bash

|

||||

curl http://localhost:8080/v1/images/generations \

|

||||

-H "Content-Type: application/json" \

|

||||

-d '{

|

||||

"prompt": "<positive prompt>|<negative prompt>",

|

||||

"model": "animagine-xl",

|

||||

"step": 51,

|

||||

"size": "1024x1024"

|

||||

}'

|

||||

```
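The same request body can be assembled programmatically. The following Python sketch joins the positive and negative prompts with `|`, as in the curl example above; the `build_image_request` helper and the sample prompts are assumptions made for illustration.

```python
import json


def build_image_request(model, positive, negative="", step=None, size="512x512"):
    """Build the JSON body for /v1/images/generations.

    The positive and negative prompts are joined with "|", following the
    "<positive prompt>|<negative prompt>" convention of the curl example.
    """
    prompt = f"{positive}|{negative}" if negative else positive
    body = {"prompt": prompt, "model": model, "size": size}
    if step is not None:
        body["step"] = step
    return body


body = build_image_request(
    "animagine-xl",
    "a castle at sunset",
    negative="blurry, low quality",
    step=51,
    size="1024x1024",
)
print(json.dumps(body))
```

The resulting JSON can be posted to `http://localhost:8080/v1/images/generations` with any HTTP client.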

|

||||

|

||||

#### Image to Image

|

||||

|

||||

https://huggingface.co/docs/diffusers/using-diffusers/img2img

|

||||

|

||||

An example model (GPU):

|

||||

```yaml
name: stablediffusion-edit
parameters:
  model: nitrosocke/Ghibli-Diffusion
backend: diffusers
step: 25
cuda: true
f16: true
diffusers:
  pipeline_type: StableDiffusionImg2ImgPipeline
  enable_parameters: "negative_prompt,num_inference_steps,image"
```

|

||||

|

||||

```bash

|

||||

IMAGE_PATH=/path/to/your/image

|

||||

(echo -n '{"file": "'; base64 "$IMAGE_PATH" | tr -d '\n'; echo '", "prompt": "a sky background","size": "512x512","model":"stablediffusion-edit"}') |

|

||||

curl -H "Content-Type: application/json" -d @- http://localhost:8080/v1/images/generations

|

||||

```
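For scripted use, the image can be encoded in Python instead of the shell. This is a minimal sketch, assuming the same `file`, `prompt`, `size` and `model` fields as the curl example; the `build_img2img_request` helper and the throwaway demo file are hypothetical. `base64.b64encode` emits no line breaks, so the value can be embedded in JSON directly.

```python
import base64
import json
import os
import tempfile


def build_img2img_request(image_path, prompt, model, size="512x512"):
    """Read an image from disk and embed it, base64-encoded, in the request body."""
    with open(image_path, "rb") as fh:
        encoded = base64.b64encode(fh.read()).decode("ascii")
    return {"file": encoded, "prompt": prompt, "size": size, "model": model}


# Demo with a throwaway file standing in for a real image.
with tempfile.NamedTemporaryFile(suffix=".png", delete=False) as tmp:
    tmp.write(b"not a real image")
    demo_path = tmp.name

request_body = build_img2img_request(demo_path, "a sky background", "stablediffusion-edit")
os.remove(demo_path)
print(json.dumps(request_body))
```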

|

||||

|

||||

#### Depth to Image

|

||||

|

||||

https://huggingface.co/docs/diffusers/using-diffusers/depth2img

|

||||

|

||||

```yaml
name: stablediffusion-depth
parameters:
  model: stabilityai/stable-diffusion-2-depth
backend: diffusers
step: 50
# GPU settings; set both to false to run on CPU
f16: true
cuda: true
diffusers:
  pipeline_type: StableDiffusionDepth2ImgPipeline
  enable_parameters: "negative_prompt,num_inference_steps,image"
  cfg_scale: 6
```

|

||||

|

||||

```bash

|

||||

(echo -n '{"file": "'; base64 ~/path/to/image.jpeg | tr -d '\n'; echo '", "prompt": "a sky background","size": "512x512","model":"stablediffusion-depth"}') |

|

||||

curl -H "Content-Type: application/json" -d @- http://localhost:8080/v1/images/generations

|

||||

```

|

||||

|

||||

#### img2vid

|

||||

|

||||

|

||||

```yaml
name: img2vid
parameters:
  model: stabilityai/stable-video-diffusion-img2vid
backend: diffusers
step: 25
# GPU settings; set both to false to run on CPU
f16: true
cuda: true
diffusers:
  pipeline_type: StableVideoDiffusionPipeline
```

|

||||

|

||||

```bash

|

||||

(echo -n '{"file": "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/svd/rocket.png?download=true","size": "512x512","model":"img2vid"}') |

|

||||

curl -H "Content-Type: application/json" -X POST -d @- http://localhost:8080/v1/images/generations

|

||||

```

|

||||

|

||||

#### txt2vid

|

||||

|

||||

```yaml
name: txt2vid
parameters:
  model: damo-vilab/text-to-video-ms-1.7b
backend: diffusers
step: 25
# GPU settings; set both to false to run on CPU
f16: true
cuda: true
diffusers:
  pipeline_type: VideoDiffusionPipeline
  cuda: true
```

|

||||

|

||||

```bash

|

||||

(echo -n '{"prompt": "spiderman surfing","size": "512x512","model":"txt2vid"}') |

|

||||

curl -H "Content-Type: application/json" -X POST -d @- http://localhost:8080/v1/images/generations

|

||||

```

|

||||

|

|

@ -2,7 +2,9 @@

|

|||

+++

|

||||

disableToc = false

|

||||

title = "🖼️ Model gallery"

|

||||

weight = 7

|

||||

|

||||

weight = 18

|

||||

url = '/models'

|

||||

+++

|

||||

|

||||

<h1 align="center">

|

||||

|

|

@ -15,13 +17,13 @@ The model gallery is a (experimental!) collection of models configurations for [

|

|||

|

||||

To ease the installation of models, LocalAI provides a way to preload models at start and to download and install them at runtime. You can install models manually by copying them over to the `models` directory, or use the API to configure, download and verify the model assets for you. As the UI is still a work in progress, you will find here the documentation about the API endpoints.

|

||||

|

||||

{{% notice note %}}

|

||||

{{% alert note %}}

|

||||

The models in this gallery are not directly maintained by LocalAI. If you find a model that is not working, please open an issue on the model gallery repository.

|

||||

{{% /notice %}}

|

||||

{{% /alert %}}

|

||||

|

||||

{{% notice note %}}

|

||||

{{% alert note %}}

|

||||

GPT and text generation models might have a license which is not permissive for commercial use or might be questionable or without any license at all. Please check the model license before using it. The official gallery contains only open licensed models.

|

||||

{{% /notice %}}

|

||||

{{% /alert %}}

|

||||

|

||||

## Useful Links and resources

|

||||

|

||||

|

|

@ -48,7 +50,7 @@ GALLERIES=[{"name":"model-gallery", "url":"github:go-skynet/model-gallery/index.

|

|||

|

||||

where `github:go-skynet/model-gallery/index.yaml` will be expanded automatically to `https://raw.githubusercontent.com/go-skynet/model-gallery/main/index.yaml`.
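The expansion described above can be pictured with a short Python sketch. It is only an illustration of the documented mapping, not LocalAI's actual implementation; the `expand_gallery_url` helper and the assumption that the branch is always `main` are hypothetical.

```python
def expand_gallery_url(url, branch="main"):
    """Expand a `github:owner/repo/path` shorthand to a raw.githubusercontent.com URL.

    Assumption: the branch defaults to `main`, as in the example in the text.
    """
    prefix = "github:"
    if not url.startswith(prefix):
        return url  # already a full URL; leave it untouched
    owner, repo, path = url[len(prefix):].split("/", 2)
    return f"https://raw.githubusercontent.com/{owner}/{repo}/{branch}/{path}"


print(expand_gallery_url("github:go-skynet/model-gallery/index.yaml"))
```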

|

||||

|

||||

{{% notice note %}}

|

||||

{{% alert note %}}

|

||||

|

||||

As this feature is experimental, you need to run `local-ai` with a list of `GALLERIES`. Currently there are two galleries:

|

||||

|

||||

|

|

@ -63,19 +65,19 @@ GALLERIES=[{"name":"model-gallery", "url":"github:go-skynet/model-gallery/index.

|

|||

|

||||

If running with `docker-compose`, simply edit the `.env` file and uncomment the `GALLERIES` variable, and add the one you want to use.

|

||||

|

||||

{{% /notice %}}

|

||||

{{% /alert %}}

|

||||

|

||||

{{% notice note %}}

|

||||

{{% alert note %}}

|

||||

You might not find all the models in this gallery, as it is updated by automated CI. Most models can however be found on huggingface (https://huggingface.co/), and they generally become available in the gallery `~24h` after upload.

|

||||

|

||||

Under no circumstances are LocalAI or its developers responsible for the models in this gallery, as CI only indexes them and provides a convenient way to install them with an automatic configuration and a consistent API. Don't install models from authors you don't trust, and check the appropriate license for your use case. Models are automatically indexed and hosted on huggingface (https://huggingface.co/). For any issue with the models, please open an issue on the model gallery repository if it's a LocalAI misconfiguration, otherwise refer to the huggingface repository. If you think a model should not be listed, please reach out to us and we will remove it from the gallery.

|

||||

{{% /notice %}}

|

||||

{{% /alert %}}

|

||||

|

||||

{{% notice note %}}

|

||||

{{% alert note %}}

|

||||

|

||||

There is no documentation yet on how to build a gallery or a repository - but you can find an example in the [model-gallery](https://github.com/go-skynet/model-gallery) repository.

|

||||

|

||||

{{% /notice %}}

|

||||

{{% /alert %}}

|

||||

|

||||

|

||||

### List Models

|

||||

|

|

@ -117,7 +119,7 @@ where:

|

|||

- `bert-embeddings` is the model name in the gallery

|

||||

(read its [config here](https://github.com/go-skynet/model-gallery/blob/main/bert-embeddings.yaml)).

|

||||

|

||||

{{% notice note %}}

|

||||

{{% alert note %}}

|

||||

If the `huggingface` model gallery is enabled (it's enabled by default),

|

||||

and the model has an entry in the model gallery's associated YAML config

|

||||

(for `huggingface`, see [`model-gallery/huggingface.yaml`](https://github.com/go-skynet/model-gallery/blob/main/huggingface.yaml)),

|

||||

|

|

@ -132,7 +134,7 @@ curl $LOCALAI/models/apply -H "Content-Type: application/json" -d '{

|

|||

```

|

||||

|

||||

Note that the `id` can be used similarly when pre-loading models at start.

|

||||

{{% /notice %}}

|

||||

{{% /alert %}}

|

||||

|

||||

|

||||

## How to install a model (without a gallery)

|

||||

|

|

@ -217,7 +219,7 @@ YAML:

|

|||

|

||||

</details>

|

||||

|

||||

{{% notice note %}}

|

||||

{{% alert note %}}

|

||||

|

||||

You can already find some openly licensed models in the [model gallery](https://github.com/go-skynet/model-gallery).

|

||||

|

||||

|

|

@ -241,7 +243,7 @@ curl $LOCALAI/models/apply -H "Content-Type: application/json" -d '{

|

|||

|

||||

</details>

|

||||

|

||||

{{% /notice %}}

|

||||

{{% /alert %}}

|

||||

|

||||

## Installing a model with a different name

|

||||

|

||||

|

|

@ -2,7 +2,7 @@

|

|||

+++

|

||||

disableToc = false

|

||||

title = "🔥 OpenAI functions"

|

||||

weight = 2

|

||||

weight = 17

|

||||

+++

|

||||

|

||||

LocalAI supports running OpenAI functions with `llama.cpp` compatible models.

|

||||

|

|

@ -67,13 +67,13 @@ response = openai.ChatCompletion.create(

|

|||

# ...

|

||||

```

|

||||

|

||||

{{% notice note %}}

|

||||

{{% alert note %}}

|

||||

When running the python script, be sure to:

|

||||

|

||||

- Set `OPENAI_API_KEY` environment variable to a random string (the OpenAI api key is NOT required!)

|

||||

- Set `OPENAI_API_BASE` to point to your LocalAI service, for example `OPENAI_API_BASE=http://localhost:8080`

|

||||

|

||||

{{% /notice %}}

|

||||

{{% /alert %}}

|

||||

|

||||

## Advanced

|

||||

|

||||

|

|

@ -0,0 +1,263 @@

|

|||

|

||||

+++

|

||||

disableToc = false

|

||||

title = "📖 Text generation (GPT)"

|

||||

weight = 10

|

||||

+++

|

||||

|

||||

LocalAI supports generating text with GPT models using `llama.cpp` and other backends (such as `rwkv.cpp`). See also the [Model compatibility]({{%relref "docs/reference/compatibility-table" %}}) page for an up-to-date list of the supported model families.

|

||||

|

||||

Note:

|

||||

|

||||

- You can also specify the model name as part of the OpenAI token.

|

||||

- If only one model is available, the API will use it for all the requests.

|

||||

|

||||

## API Reference

|

||||

|

||||

### Chat completions

|

||||

|

||||

https://platform.openai.com/docs/api-reference/chat

|

||||

|

||||

For example, to generate a chat completion, you can send a POST request to the `/v1/chat/completions` endpoint with the instruction as the request body:

|

||||

|

||||

```bash

|

||||

curl http://localhost:8080/v1/chat/completions -H "Content-Type: application/json" -d '{

|

||||

"model": "ggml-koala-7b-model-q4_0-r2.bin",

|

||||

"messages": [{"role": "user", "content": "Say this is a test!"}],

|

||||

"temperature": 0.7

|

||||

}'

|

||||

```

|

||||

|

||||

Available additional parameters: `top_p`, `top_k`, `max_tokens`
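The optional sampling parameters go into the same JSON body as `temperature`. Below is a minimal Python sketch of such a body; the `build_chat_request` helper and the sample values for `top_p`, `top_k` and `max_tokens` are assumptions chosen for illustration, and the helper only forwards the parameters named above.

```python
import json


def build_chat_request(model, user_message, temperature=0.7, **sampling):
    """Build a /v1/chat/completions body; `sampling` may carry top_p, top_k, max_tokens."""
    allowed = {"top_p", "top_k", "max_tokens"}
    unknown = set(sampling) - allowed
    if unknown:
        # This helper is deliberately strict; it is not a statement about the API.
        raise ValueError(f"parameters not handled by this helper: {sorted(unknown)}")
    body = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "temperature": temperature,
    }
    body.update(sampling)
    return body


chat_body = build_chat_request(
    "ggml-koala-7b-model-q4_0-r2.bin",
    "Say this is a test!",
    top_p=0.9,
    top_k=40,
    max_tokens=64,
)
print(json.dumps(chat_body, indent=2))
```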

|

||||

|

||||

### Edit completions

|

||||

|

||||

https://platform.openai.com/docs/api-reference/edits

|

||||

|

||||

To generate an edit completion you can send a POST request to the `/v1/edits` endpoint with the instruction as the request body:

|

||||

|

||||

```bash

|

||||

curl http://localhost:8080/v1/edits -H "Content-Type: application/json" -d '{

|

||||

"model": "ggml-koala-7b-model-q4_0-r2.bin",

|

||||

"instruction": "rephrase",

|

||||

"input": "Black cat jumped out of the window",

|

||||

"temperature": 0.7

|

||||

}'

|

||||

```

|

||||

|

||||

Available additional parameters: `top_p`, `top_k`, `max_tokens`.

|

||||

|

||||

### Completions

|

||||

|

||||

https://platform.openai.com/docs/api-reference/completions

|

||||

|

||||

To generate a completion, you can send a POST request to the `/v1/completions` endpoint with the prompt in the request body:

|

||||

|

||||

```bash

|

||||

curl http://localhost:8080/v1/completions -H "Content-Type: application/json" -d '{

|

||||

"model": "ggml-koala-7b-model-q4_0-r2.bin",

|

||||

"prompt": "A long time ago in a galaxy far, far away",

|

||||

"temperature": 0.7

|

||||

}'

|

||||

```

|

||||

|

||||

Available additional parameters: `top_p`, `top_k`, `max_tokens`

|

||||

|

||||

### List models

|

||||

|

||||

You can list all the models available with:

|

||||

|

||||

```bash

|

||||

curl http://localhost:8080/v1/models

|

||||

```

|

||||

|

||||

## Backends

|

||||

|

||||

### AutoGPTQ

|

||||

|

||||

[AutoGPTQ](https://github.com/PanQiWei/AutoGPTQ) is an easy-to-use LLM quantization package with user-friendly APIs, based on the GPTQ algorithm.

|

||||

|

||||

#### Prerequisites

|

||||

|

||||

This is an extra backend: it is already available in the container images, so no setup is required.

|

||||

|

||||

If you are building LocalAI locally, you need to install [AutoGPTQ manually](https://github.com/PanQiWei/AutoGPTQ#quick-installation).

|

||||

|

||||

|

||||

#### Model setup

|

||||

|

||||

The models are automatically downloaded from `huggingface` if not present the first time. It is possible to define models via `YAML` config file, or just by querying the endpoint with the `huggingface` repository model name. For example, create a `YAML` config file in `models/`:

|

||||

|

||||

```
name: orca
backend: autogptq
model_base_name: "orca_mini_v2_13b-GPTQ-4bit-128g.no-act.order"
parameters:
  model: "TheBloke/orca_mini_v2_13b-GPTQ"
# ...
```

|

||||

|

||||

Test with:

|

||||

|

||||

```bash

|

||||

curl http://localhost:8080/v1/chat/completions -H "Content-Type: application/json" -d '{

|

||||

"model": "orca",

|

||||

"messages": [{"role": "user", "content": "How are you?"}],

|

||||

"temperature": 0.1

|

||||

}'

|

||||

```

|

||||

### RWKV

|

||||

|

||||

A full example on how to run a rwkv model is in the [examples](https://github.com/go-skynet/LocalAI/tree/master/examples/rwkv).

|

||||

|

||||

Note: rwkv models need to specify the backend `rwkv` in the YAML config file, and an associated tokenizer file must be provided alongside the model:

|

||||

|

||||

```

|

||||

36464540 -rw-r--r-- 1 mudler mudler 1.2G May 3 10:51 rwkv_small

|

||||

36464543 -rw-r--r-- 1 mudler mudler 2.4M May 3 10:51 rwkv_small.tokenizer.json

|

||||

```
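Judging from the listing above, the tokenizer sits next to the model file and shares its name with a `.tokenizer.json` suffix. The Python sketch below derives that path so a setup script can check for it; the naming rule is inferred from the listing only, and the `tokenizer_path_for` helper is hypothetical.

```python
from pathlib import Path


def tokenizer_path_for(model_path):
    """Return the tokenizer path expected next to an rwkv model file.

    Assumption: the tokenizer is named `<model file>.tokenizer.json`,
    as in the directory listing above.
    """
    model = Path(model_path)
    return model.with_name(model.name + ".tokenizer.json")


tokenizer = tokenizer_path_for("models/rwkv_small")
print(tokenizer.name)
print(tokenizer.exists())  # False unless the file is really there
```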

|

||||

|

||||

### llama.cpp

|

||||

|

||||

[llama.cpp](https://github.com/ggerganov/llama.cpp) is a popular port of Facebook's LLaMA model in C/C++.

|

||||

|

||||

{{% alert note %}}

|

||||

|

||||

The `ggml` file format has been deprecated. If you are using `ggml` models and configuring your model with a YAML file, use the `llama-ggml` backend instead. If you are relying on automatic detection of the model, you should be fine. For `gguf` models, use the `llama` backend. The Go backend is deprecated as well, but is still available as `go-llama`. It still supports features not available in the mainline backend: speculative sampling and embeddings.

|

||||

|

||||

{{% /alert %}}

|

||||

|

||||

#### Features

|

||||

|

||||

The `llama.cpp` model supports the following features:

|

||||

- [📖 Text generation (GPT)]({{%relref "docs/features/text-generation" %}})

|

||||

- [🧠 Embeddings]({{%relref "docs/features/embeddings" %}})

|

||||

- [🔥 OpenAI functions]({{%relref "docs/features/openai-functions" %}})

|

||||

- [✍️ Constrained grammars]({{%relref "docs/features/constrained_grammars" %}})

|

||||

|

||||

#### Setup

|

||||

|

||||

LocalAI supports `llama.cpp` models out of the box. You can use the `llama.cpp` model in the same way as any other model.

|

||||

|

||||

##### Manual setup

|

||||

|

||||

It is sufficient to copy the `ggml` or `gguf` model files into the `models` folder. You can then refer to the model by its file name in the `model` parameter of the API calls.

|

||||

|

||||

[You can optionally create an associated YAML]({{%relref "docs/advanced" %}}) model config file to tune the model's parameters or apply a template to the prompt.

|

||||

|

||||

Prompt templates are useful for models that are fine-tuned towards a specific prompt.

|

||||

|

||||

##### Automatic setup

|

||||

|

||||

LocalAI supports model galleries which are indexes of models. For instance, the huggingface gallery contains a large curated index of models from the huggingface model hub for `ggml` or `gguf` models.

|

||||

|

||||

For instance, if you have the galleries enabled and LocalAI already running, you can just start chatting with models in huggingface by running:

|

||||

|

||||

```bash

|

||||

curl http://localhost:8080/v1/chat/completions -H "Content-Type: application/json" -d '{

|

||||

"model": "TheBloke/WizardLM-13B-V1.2-GGML/wizardlm-13b-v1.2.ggmlv3.q2_K.bin",

|

||||

"messages": [{"role": "user", "content": "Say this is a test!"}],

|

||||

"temperature": 0.1

|