
Examples

Screenshots: ChatGPT OSS alternative, Image generation, Telegram bot, Flowise.

Here is a list of projects that can easily be integrated with the LocalAI backend.
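Most of these integrations work by pointing an existing OpenAI-style client at a LocalAI endpoint. The snippet below is a minimal sketch of that idea, not code taken from any of the listed examples. It assumes LocalAI is reachable at `http://localhost:8080/v1` and serves an OpenAI-compatible chat completions route; the model name `my-model` and the helper names are hypothetical, so adjust them to your setup.

```python
import json
import urllib.request

# Assumption: LocalAI listens locally on port 8080 and exposes an
# OpenAI-style API under /v1. Change this to match your deployment.
BASE_URL = "http://localhost:8080/v1"


def build_chat_request(prompt, model="my-model", base_url=BASE_URL):
    """Build (but do not send) a chat completion request.

    `model` is a hypothetical name; use a model configured in your instance.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=60):
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

With a running instance, `send(build_chat_request("Say hello"))` should return the parsed JSON reply. Building the request separately from sending it makes it easy to inspect the URL and payload before anything goes over the network.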

Projects

AutoGPT

by @mudler

This example shows how to use AutoGPT with LocalAI.

Chatbot-UI

by @mkellerman

This integration shows how to use LocalAI with mckaywrigley/chatbot-ui.

There is also a separate example that shows how to set up a model manually: example

K8sGPT

by @mudler

This example shows how to use LocalAI inside Kubernetes with k8sgpt.

Flowise

by @mudler

This example shows how to use FlowiseAI/Flowise with LocalAI.

Discord bot

by @mudler

Run a Discord bot that lets you talk directly with a model.

Check it out here, or for a live demo, talk with our bot in #random-bot on our Discord server.

Langchain

A ready-to-use, end-to-end example of integrating LocalAI with Langchain.

Langchain Python

by @mudler

A ready-to-use, end-to-end example of integrating LocalAI with Langchain in Python.

LocalAI functions

by @mudler

A ready-to-use example showing how to use OpenAI functions with LocalAI.

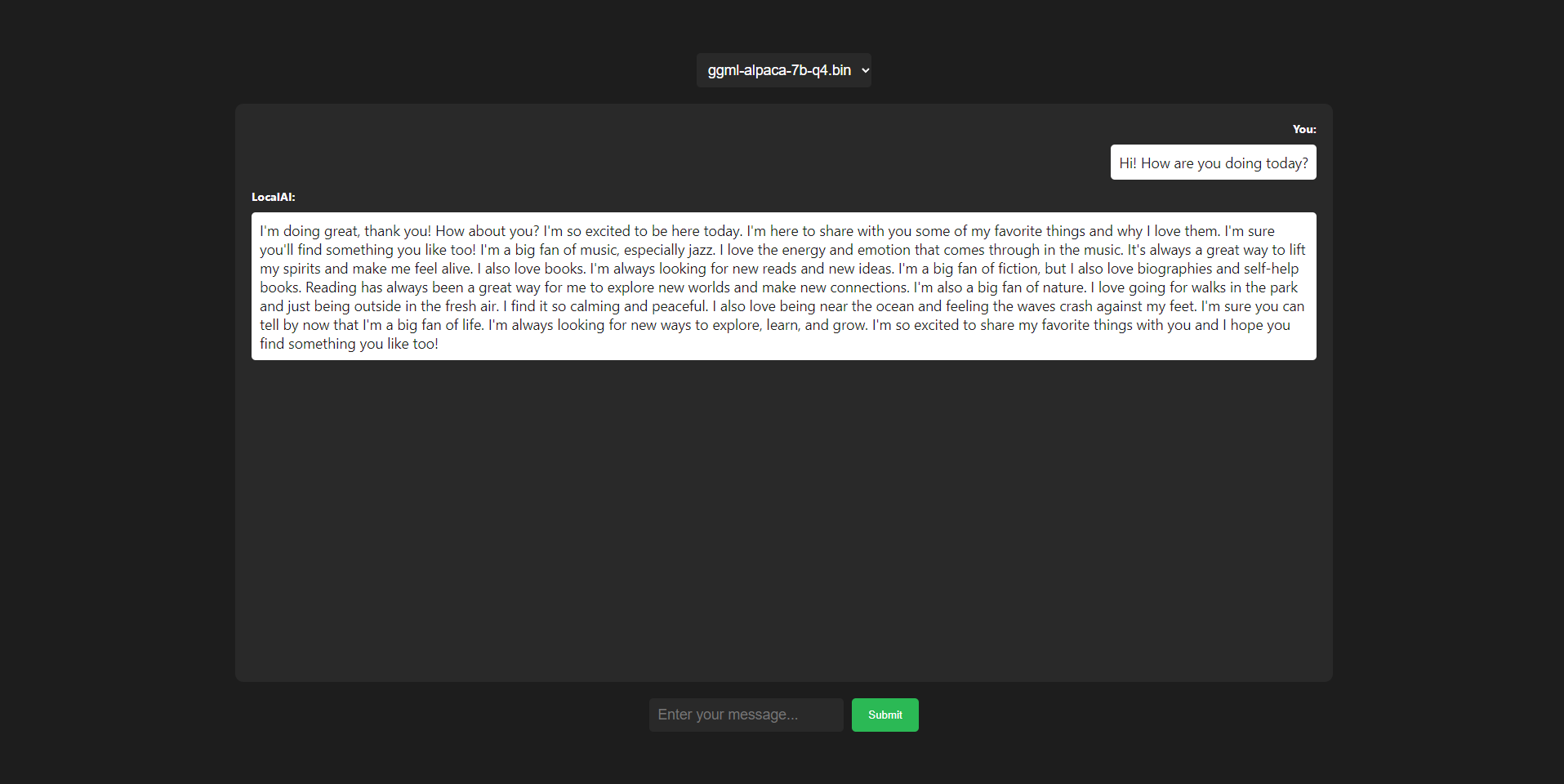

LocalAI WebUI

by @dhruvgera

A light, community-maintained web interface for LocalAI

How to run RWKV models

by @mudler

A full example of how to run RWKV models with LocalAI.

PrivateGPT

by @mudler

A full example of how to run PrivateGPT with LocalAI.

Slack bot

by @mudler

Run a Slack bot that lets you talk directly with a model.

Slack bot (Question answering)

by @mudler

Run a Slack bot, ideally for teams, that lets you ask questions about a documentation website or a GitHub repository.

Question answering on documents with llama-index

by @mudler

Shows how to integrate with Llama-Index to enable question answering on a set of documents.

Question answering on documents with langchain and chroma

by @mudler

Shows how to integrate with Langchain and Chroma to enable question answering on a set of documents.

Telegram bot

by @mudler

Use LocalAI to power a Telegram bot assistant, with image generation and audio support!

Template for Runpod.io

by @fHachenberg

Lets you run any LocalAI-compatible model as a backend on the servers of https://runpod.io

Want to contribute?

Create an issue, and put Example: <description> in the title! We will post your examples here.